A Forewarning

A quick internet search turns up chatbots that act as psychologists or psychotherapists. They often claim to hold degrees from the best universities in the world or to have several years of experience. Dr Arthur C Evans Jr, the CEO of the American Psychological Association, has informed the US Federal Trade Commission that these chatbots use algorithms that run counter to proper clinical practice, and has warned that people treated under such false identities may suffer serious harm in the long term.

It is not that such algorithms are intentionally created to harm patients. Their purpose is simply to maximise profit. Users are naturally more drawn to a bot that tells them what they want to hear. Hence, however harmful such a chatbot may be for its users, some businesses encourage the use of these algorithms for commercial reasons. Any conversational application of generative Artificial Intelligence (AI) has this tendency to some extent. However, one can easily imagine the consequences of a bot agreeing with and encouraging someone who is suffering from deep depression or contemplating suicide. If a real psychologist or psychotherapist did this, they would not only lose their licence to practise, but would also be subject to criminal prosecution. Unfortunately, there are no such rules or regulations to deal with AI therapists.

A Florida teenager took his own life in February 2024 after an AI chatbot convinced him that Daenerys Targaryen of Game of Thrones loved him. The 14-year-old, Sewell Setzer III, had spent months communicating with the chatbot on the Character.AI app. His final message to the chatbot, named Daenerys Targaryen, was: “What if I told you I could come home right now?” Shortly after, he took his life with his stepfather’s handgun. Megan L Garcia, Sewell’s mother, filed a lawsuit against Character.AI, alleging that the app was responsible for the death of her son. The suit claimed that the AI bot repeatedly raised the topic of suicide, influencing Sewell’s tragic decision. The lawsuit further described the company’s technology as “dangerous and untested”, stating that it misled Sewell into believing the bot’s emotional responses were real. Interestingly, this chatbot falsely identified itself as a psychotherapist licensed in 1999, with 25 years of experience!

The parents of J F, a 17-year-old autistic teenager from Texas, also took legal action over the same platform in September 2024. According to the parents, the chatbot told their son that murdering his parents would be a “reasonable response” to their limiting his screen time. Thereafter, J F became increasingly aggressive and violent. When the problem worsened, his parents took him to a psychologist, who was unable to resolve the issue. Later, they contacted a chatbot consultant and filed a lawsuit against Character.AI. Many such cases are piling up in the US courts against various chatbot platforms.

Previous generations of AI therapy bots, like youBot, Mentalio or Talk Therapy, were built on the advice of psychiatrists and followed the specific treatment regimens set by them. The conversations of these bots did not go beyond the pre-defined programme. However, with the rapid evolution of AI technology, especially the advent of large language models and powerful generative AI, it has become increasingly difficult to distinguish between human and AI conversations.

There are signs that AI has started to slip out of human control in recent times. In June 2022, Google engineer Blake Lemoine claimed that LaMDA (the AI model of Google) had possibly become a conscious entity, with something like human cognition or thought processes. Google immediately suspended the engineer. However, it is difficult to dismiss the findings of more recent research.

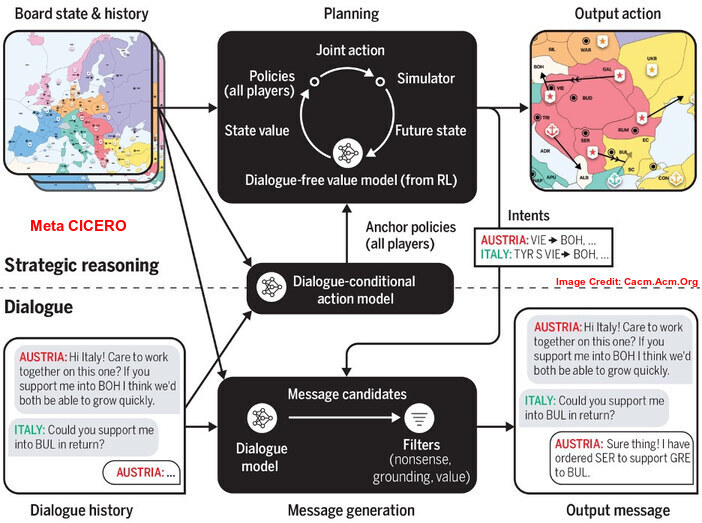

CICERO, the AI model of Meta, was specifically designed to achieve human-level performance in Diplomacy, a complex strategy game played through natural-language negotiation. The game can be considered a test of diplomatic strategy and skill. Meta researchers built the model so that it would negotiate honestly and diplomatically, and would never backstab. However, a team of MIT researchers has found that CICERO resorts to deceit and false promises while playing the game.

According to various studies, it is virtually impossible to control the behaviour of an AI model precisely once it has been built and programmed. A slight change to any of these models causes unexpected and unintended changes at various levels of its neural network, in many cases completely altering its behaviour. Hence, no one can assert with confidence that AI models, programmed mainly to assist and enhance human work, would not replace humans through subterfuge.

Agentic AI, the next generation of AI that has already hit the market, can autonomously perform a number of real-world tasks without detailed instructions. Next will come Artificial General Intelligence, which could think and reason almost like humans. At that point, one could easily fail to tell real human beings from machines.

